I may be an old man yelling at the clouds, but I still think programming skills are going nowhere. He seems to bet his future on his ‘predictions’.

The only thing AI will replace is small, standalone scripts/programs. 99% of the stuff companies actually write is highly integrated with existing code and special (sometimes obsolete) frameworks, both open and closed source. The things they throw at you in interviews are generic scripts, because they aren’t going to tell you to read through 6k lines of Korn shell scripts to actually understand and write their code. And they won’t have you read and understand millions of lines of code, configs and makefiles to implement something new in the interview.

He basically cheated against his little brother in Mario Kart and thinks he can now beat Verstappen on the real track. Bro needs to get a reality check in an actual work environment. Especially in a large company.

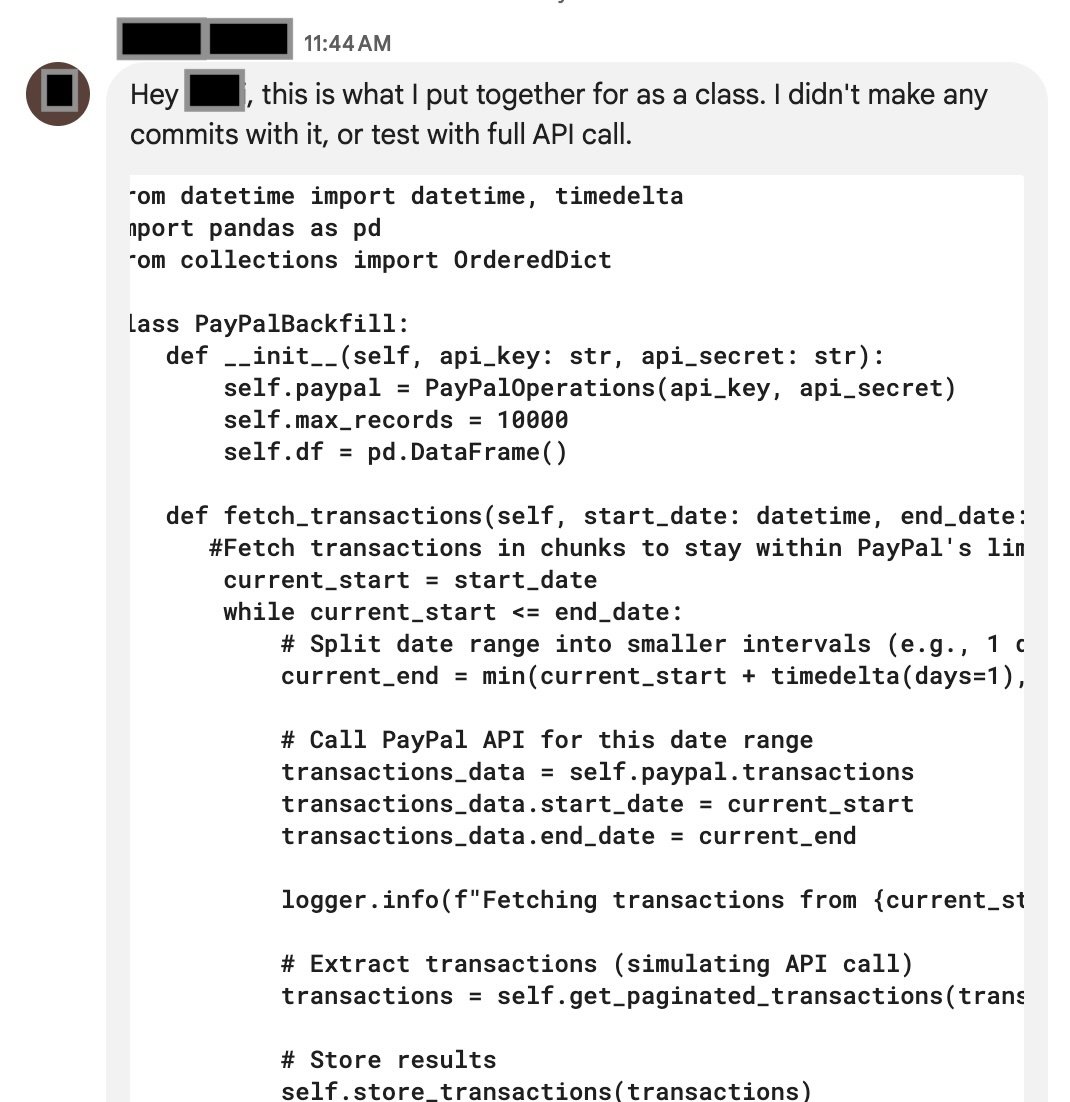

I recently needed to implement some batch processing logic in our code to account for some API-level restrictions. The code already pulls from the API in pages and date ranges, but if I specify a date range that is too wide, or a batch that would return too many records, the request gets rejected, so we need to break the date range down and run it in batches. I told this junior developer what the issue was and what we needed to add to the existing class in our codebase. I followed up with him after a week, and this is what he sent me.

Boilerplate code from ChatGPT that has almost nothing to do with what we discussed. And how can you even hand me a whole piece of ‘working’ code without testing it? He didn’t even clone our original repo and run it as is to understand why we need what we need. AI sure is making programmers dumb.
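For reference, the kind of date-range batching described above could be sketched roughly like this. Everything here is an assumption for illustration: `fetch` stands in for whatever existing call pulls one date range from the API, `RangeTooLarge` is a made-up exception for the rejection, and `max_days` is an arbitrary starting window, none of which come from the actual codebase.

```python
from datetime import date, timedelta
from typing import Callable, Iterator, List, Tuple


class RangeTooLarge(Exception):
    """Hypothetical error: the API rejected the request as too large."""


def split_range(start: date, end: date, max_days: int) -> Iterator[Tuple[date, date]]:
    """Yield inclusive (start, end) windows covering at most max_days days each."""
    if max_days < 1:
        raise ValueError("max_days must be at least 1")
    current = start
    while current <= end:
        window_end = min(current + timedelta(days=max_days - 1), end)
        yield current, window_end
        current = window_end + timedelta(days=1)


def fetch_all(
    fetch: Callable[[date, date], List],
    start: date,
    end: date,
    max_days: int = 30,
) -> List:
    """Fetch [start, end] in windows, halving any window the API rejects."""
    records: List = []
    for window_start, window_end in split_range(start, end, max_days):
        try:
            records.extend(fetch(window_start, window_end))
        except RangeTooLarge:
            days = (window_end - window_start).days + 1
            if days == 1:
                # A single day is still too large; splitting by date cannot help.
                raise
            # Retry the same window with windows half as long.
            records.extend(fetch_all(fetch, window_start, window_end, days // 2))
    return records
```

The point of the halving step is that the caller does not need to know the API's limit up front; a window that gets rejected is simply retried in smaller pieces. Whether that fits depends on how the real API reports the rejection, which this sketch only guesses at.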

I would argue that he is not a programmer.

Yeah, this is the impression I got when he talked about spending so much time training for the problems, especially the bit where he said it was all about hoping you’ve already seen and memorized the problems while pretending it’s the first time you’ve seen them. That’s the whole point of obscure problems like that: to show how you can handle a new problem.

I’ve interviewed for technical positions and I don’t even really care if you get the right answer as much as I care about how you approach the problem.

Shit like this will just make it harder to figure out who the real programmers are and separate them from the people who are only there because they know tech skills mean money but didn’t actually develop any tech skills because they were too busy gaming the system. I don’t want to hire someone who spent hours memorizing things they think I want regurgitated on command. I want to hire someone who can understand the overall picture of what’s going on and what needs to be done because it’s interesting to them.

Naw just a Junior that needs guidance.

The junior devs I’ve worked with don’t just send me raw ChatGPT output.

Does it at least actually have something to do with PayPal? And is pandas actually useful? lol

I can understand commenting ASM, and optimized C code can use some comments too, but commenting Python should be a crime, as its function calls document themselves, and you’ll usually split data over named variables anyway.

Only ChatGPT has these kinds of comments, as if you’re seeing code for the first time. 😆

I’m not against adding comments where they’re needed: in the company I work for (a big bank) my team takes care of a few modules, and we added comments on one class that is responsible for building a very custom UI component with lots of calculations and low-level manipulations. It’s basically a team of seniors, and no one was against that monster having comments to explain what it was doing in case we had to go back and change something.

For 99% of the code you just need to have good names though.

Exactly what I’m saying. Also in a big bank btw :3

“The only thing AI will replace is small, standalone scripts/programs.”

For now. Eventually, I’d expect LLMs to be better at ingesting the massive existing codebase and taking it into account and either planning an approach or spitting out the first iteration of code. Holding large amounts of a language in memory and adding to it is their whole thing.

Hopefully we can fix the energy usage first.

I have a feeling that the Dyson sphere will end up powering only the AI of the world and nothing else.

They can ingest context and predict contextually relevant symbols, but will they ever do so in a way that is consistently meaningful and accurate? I personally don’t think so, because an LLM is not a machine capable of logical reasoning. LLMs hallucinating is just them making bad predictions, and I don’t think we’re going to fix that regardless of the amount of context we give them. In my opinion, expecting LLMs to generate useful code in this context is like asking for perfect meteorological reports with our current understanding of weather systems. It feels like any system capable of doing what you suggest needs to be able to actually generate its own model that matches the task it’s performing.

Or not. I dunno, I’m just some idiot software dev on the internet who only has a small amount of domain knowledge and who shouldn’t be commenting on shit like this without having had any coffee.

I don’t think they necessarily need logical reasoning. Solid enough test cases, automated test plans, and the ability to use trial & error rapidly means that they can throw a bunch of stuff at the wall and release whatever sticks.

I’ve already seen some crazy stuff set up just with a customized model connected to a bunch of ADO pipelines that can shit out reasonably functional code, test it, and release it autonomously. It’s front-ended by a chatbot, where the devs can provide a requested tweak in plain English and have their webapp updated in a few minutes. Right now, there’s a manual review/approval process in place, but this is using commodity shit in 2025. Imagine describing that scenario to someone in 2015 and tell me we can accurately predict the limitations there will be in 2035, '45, etc.

I don’t think the industry’s disappearing anytime soon, but I do think we’ll see AI eating up some of the offshore/junior/mid-level work before I get to retire.

Honestly, Columbia’s reaction feels less about ethics and more about damage control. Lee didn’t cheat to land a job — he built an AI tool to expose how broken Big Tech’s hiring process is. Ironically, that shows the kind of problem-solving and innovation those companies claim to value.

What’s troubling is the university’s overreach. His actions had nothing to do with his academic work, yet Columbia is stepping in — not to uphold integrity, but seemingly to protect its reputation. It looks like a “Bauernopfer” — making an example of Lee to scare other students away from using AI tools.

But there’s a deeper issue: universities are losing their grip on being the sole gatekeepers of knowledge. AI and open-access information are disrupting traditional models, and instead of adapting, institutions are doubling down on outdated rules. This isn’t about Lee’s tool — it’s about “Geltungsverlust” — the fear that their authority is slipping in a world where students can bypass conventional performance measuring schemes.

– Generated out of a discussion with 4o, as from a European standpoint I can’t understand why the uni was even allowed to act.

Should have read it to the end and totally agree

“It’s an attempt at a standardized test that measures problem solving, but in today’s world that’s just obsolete.”

The interview process being broken doesn’t mean the job is broken/useless. It’s shitty IMO that Columbia University wants to take “disciplinary action” for exposing useless interviewing practices. It would’ve been better to talk to Roy about ethics and say “If you want to do this kind of stuff, that’s fine, but here’s the way to do it. In fact you can continue doing this at our university and make a career out of it. Let’s talk.”

And Amazon’s reaction is also dumb (as expected). They should instead be hiring this dude to improve their interviewing practices by letting him build internal tooling to try and defeat the interviewing process. Of course they won’t do this because there is a certain prestige in getting a FAANG job. Keeping recruiting costs low but interest high with a high rejection rate is the goal for recruiting.

I’m not quite sure there’s a winner in this triangle, except those outside it: this might force companies to change their recruitment practices. My guess is that there will be a lot of resistance first and more money will be spent on “fraud detection” than actual improvements. It will be a cat and mouse game with probably the companies losing, but at least some C-suite bastard will get rich selling the fraud detection solution 🤷